March 29, 2023

Gambit: A Solidity Mutation Testing Tool for Formal Verification

Author:

Chandrakana Nandi

Chandrakana NandiWhen developers write tests, they often think about common logical errors they may have introduced or edge cases they may not have handled. But what about errors they did not think they may have introduced, such as programming or complex logical errors? How does one write tests to anticipate unanticipated bugs? Mutation testing is a practical solution to this problem. It is a well-studied technique for improving / assessing test suites and has seen significant adoption in large software companies [1]. Typically applied in the context of testing, the key idea is to synthesize faulty versions of a program and check if the existing test suite is able to catch these faulty programs. If not, then the developer expands the test suite with new tests designed to catch the previously uncaught problems. Mutation analysis is the process of measuring the quality of an existing test suite in terms of its ability to kill “mutants” (faulty programs).

In this blog, we discuss our efforts to apply mutation testing in the context of automated verification. We have developed an open-source mutation generator for the Solidity language and integrated it with the Certora Prover, a tool for formally verifying smart contracts. The Certora Prover is accompanied by a declarative, domain-specific language called CVL that allows users to write formal specifications for their programs as “rules”. The Prover then automatically verifies that the program satisfies the specification in all possible cases or it produces a counterexample showing a violation. This procedure can identify critical security bugs before the code is deployed by checking the executable bytecode (you can read more about Certora’s core technology in our white paper). But how does the user know whether the specification was “good enough”? After all, one of the hardest challenges in formal verification is writing the specification itself.

Gambit: An Open Source Mutation Generator for Solidity

As a first step towards addressing this challenge, we built Gambit, an open-source mutation generator for Solidity. It traverses the AST (Abstract Syntax Tree) to identify potential mutation locations in the source and then performs the mutations in the source directly. Gambit is implemented in Rust. The documentation gives a detailed overview of how to use Gambit on Solidity projects of varying complexity. The mutations generated by Gambit can be used to evaluate not only formal specifications but also tests! An example use case we envision is integration with testing frameworks like Foundry, where mutation testing is already a topic of interest. As the documentation shows, Gambit offers users fine-grained control over what contracts and functions to mutate. This helps reduce the generation of unhelpful mutants.

Using Mutations from Gambit for Formal Verification

On top of Gambit, we built a tool that automatically verifies the generated mutants against the original formal specification using the Certora Prover. Its goal is to use the mutants generated by Gambit to assess and improve the formal specification written in CVL. In the rest of this article, we assume that the original program passed verification. To a first approximation, the more mutants a specification kills, the “better” the CVL specification is (redundant and indistinguishable mutants however make this notion more complex in practice) in terms of coverage which, in this article, refers to the percentage of killed mutants. For every mutant, verification can have two main outcomes: either the mutant passes verification or fails it (there are also other scenarios like timeouts which we will not cover here).

When a mutant passes verification, it often provides insights into how to improve the specification by identifying potential incompleteness or imprecisions. Improvements to a specification can have different flavors: sometimes new correctness properties may be required whereas in other cases existing ones may have to be strengthened.

Of course it is also possible that the mutant is benign, e.g., it is semantically equivalent to the original program or simply mutates parts of the code that are irrelevant to the verification.

When verification fails and a mutant is killed, it provides additional evidence that the specification is “good” and able to catch the bug introduced by the mutation. It can also provide insights about coverage that are otherwise difficult to discover! For example, over-approximations are a common trick used to scale formal verification. Mutation testing can reveal that a specification in fact has higher coverage than what an engineer expects — it may catch mutants that the engineer did not think could be caught and this further increases confidence in the specification quality.

Visualizing the Results

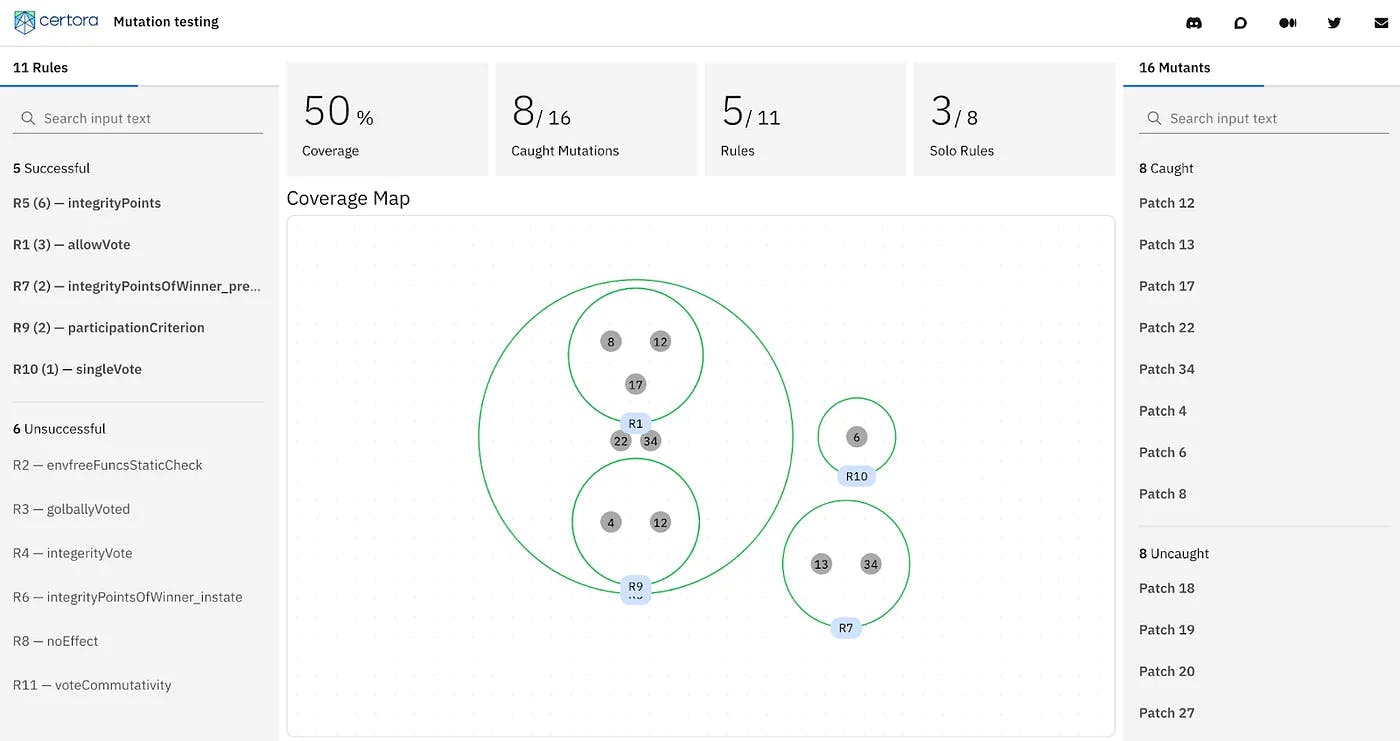

Certora generates an interpretable and user-friendly report to summarize the outcome of verifying all the mutants. The UI has been developed by our collaborators at Evil Martians and is open-source. Any testing / verification tool that generates reports following the UI documentation can use this infrastructure to visualize the results of mutation testing. Figure 1 is an example of the generated visualization for the Borda contract. The full visualization for this example can be found here.

Figure 1. Visualization of the results of verifying mutants

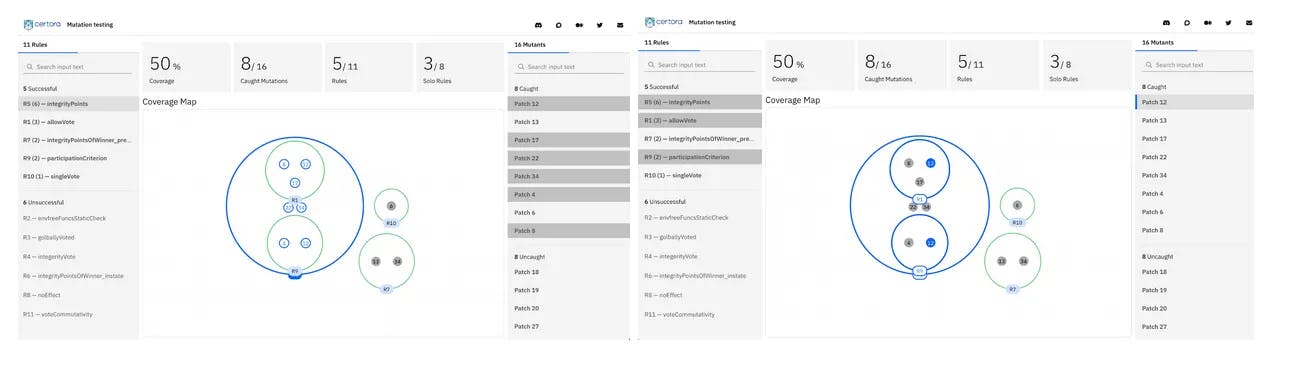

Figure 2. Highlighting rules and mutants

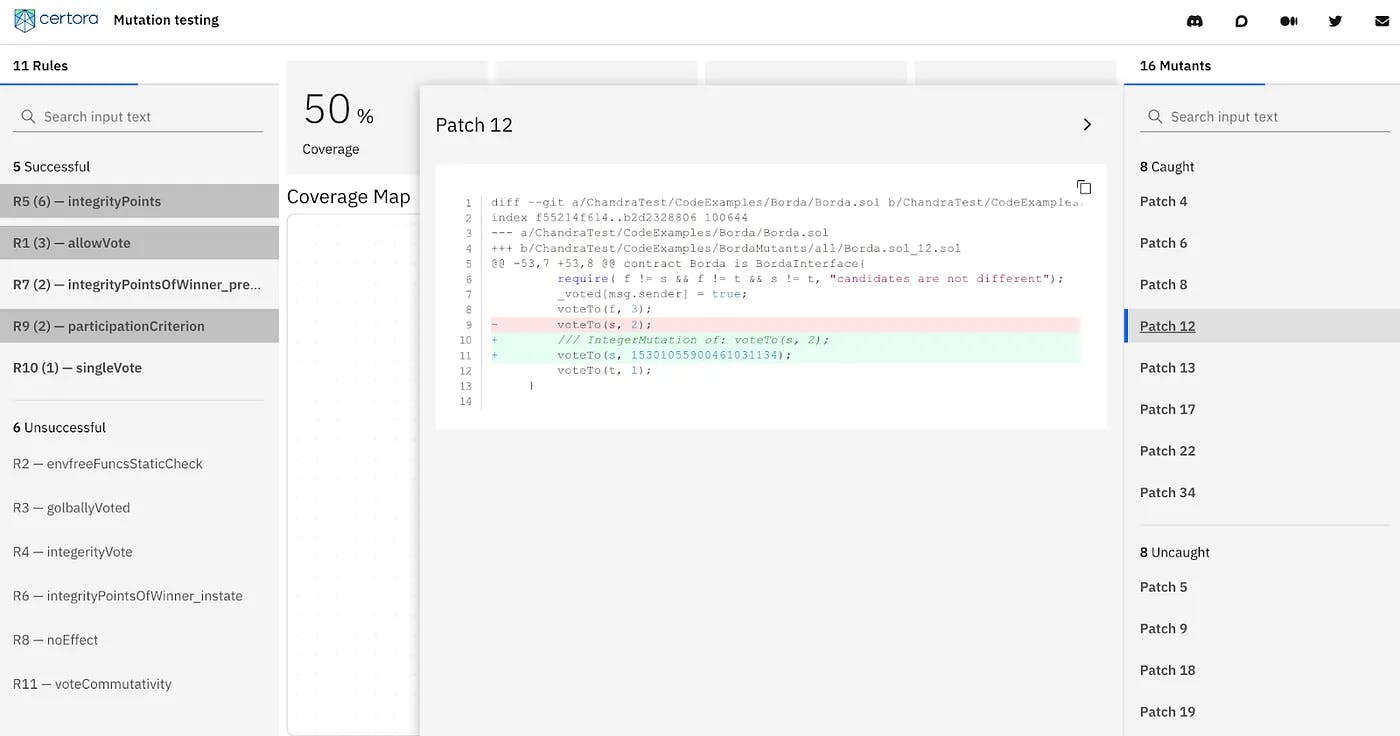

The gray dots represent mutants and the green circles represent specification rules in CVL. A gray dot inside a green circle indicates that the rule corresponding to the green circle caught the mutant. Coverage indicates how many of the total mutants were killed by verification. Selecting a CVL rule shows which mutants it caught and selecting a mutant shows which rules caught that mutant (shown by blue highlights in Figure 2). Figure 3 shows how a user can see the diff of a mutant with respect to the original program.

Figure 3. The diff of a mutant

There are two lists of mutants in the visualization: those caught and those uncaught. Uncaught mutants can be used as a guide to improve the specification. The visualization also shows the fraction of specification rules that actually caught mutants. The “Solo Rules” metric reports on the fraction of mutants that are caught by a single CVL specification rule out of all killed mutants. This metric is a proxy for measuring rule quality; higher is better. If a mutant is caught by multiple rules it indicates an overlap in the behavior that is captured by the rules whereas if a mutant is caught by a single rule it indicates a strong, unique rule with no overlap with other rules.

We are actively working on additional features like better equivalence detection and mutation generation. We hope that with this tool, users will have more confidence in their specifications and the results of formal verification in general.

[1] G. Petrović, M. Ivanković, G. Fraser and R. Just, “Practical Mutation Testing at Scale: A view from Google,” in IEEE Transactions on Software Engineering, vol. 48, no. 10, pp. 3900–3912, 1 Oct. 2022, doi: 10.1109/TSE.2021.3107634.

Acknowledgements. Thanks to the teams at Certora and Evil Martians for their amazing contributions!